To Get the Best Look at a Person's Face, Look Just Below the Eyes, According to UCSB Researchers

They say that the eyes are the windows to the soul. However, to get a real idea of what a person is up to, according to UC Santa Barbara researchers Miguel Eckstein and Matt Peterson, the best place to check is right below the eyes. Their findings are published in the Proceedings of the National Academy of Sciences.

"It's pretty fast, it's effortless; we're not really aware of what we're doing," said Miguel Eckstein, professor of psychology in the Department of Psychological & Brain Sciences. Using an eye tracker and more than 100 photos of faces, Eckstein and graduate research assistant Peterson followed the gaze of the experiment's participants to determine where they look in the first crucial moment of judging a person's identity, gender, and emotional state.

"For the majority of people, the first place we look at is somewhere in the middle, just below the eyes," Eckstein said. One possible reason is that we are trained from youth to look there, because it is polite in some cultures. Another is that it allows us to figure out where the person's attention is focused.

However, Peterson and Eckstein hypothesize that, despite the ever-so-brief glance (250 milliseconds), the relatively featureless point of focus, and the fact that we're usually unaware that we're doing it, the brain is actually using sophisticated computations to plan an eye movement that ensures the highest accuracy in tasks that are evolutionarily important: determining flight, fight, or love at first sight.

"When you look at a scene, or at a person's face, you're not just using information right in front of you," said Peterson. The point where one's gaze is aimed falls on the region of highest resolution in the eye, the fovea, a slight depression in the retina at the back of the eye. The regions surrounding the fovea, known as the periphery, provide less spatial detail.

However, according to Peterson, at a conversational distance a face spans a large area of the visual field, so there is information to be gleaned not just from the eyes but also from features like the nose and the mouth. Yet when participants were directed to determine the identity, gender, and emotion of the people in the photos while looking elsewhere (at the forehead or the mouth, for instance), they did not perform as well as when they looked close to the eyes.

A sophisticated algorithm that mimics the varying spatial detail of human processing across the visual field, and integrates all of the information to make decisions, allowed Peterson and Eckstein to predict the best place to look within a face for each of these perceptual tasks. The predicted places varied moderately across tasks and corresponded closely to where humans actually look.
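One way to picture how such a model combines information is a textbook signal detection rule. This is a simplified illustration, not the model from the PNAS paper: it assumes each facial feature $i$ acts as an independent cue whose discriminability $d'_i$ shrinks as the feature lies farther from the fixation point $\mathbf{x}$, with a hypothetical falloff function $g_i$ that is steep for fine-detail features such as the eyes and shallow for large features such as the mouth.

$$
d'_i(\mathbf{x}) = d'_{i,0}\, g_i\big(\lVert \mathbf{p}_i - \mathbf{x} \rVert\big), \qquad g_i(0) = 1
$$

$$
d'_{\text{total}}(\mathbf{x}) = \sqrt{\sum_i d'_i(\mathbf{x})^{2}}, \qquad \mathbf{x}^{\ast} = \arg\max_{\mathbf{x}}\; d'_{\text{total}}(\mathbf{x})
$$

Here $\mathbf{p}_i$ is the location of feature $i$ and $d'_{i,0}$ is its discriminability when looked at directly. The root-sum-of-squares rule holds for statistically independent cues with Gaussian noise that are combined optimally; in a two-alternative task, the expected accuracy is then $\Phi\big(d'_{\text{total}}/\sqrt{2}\big)$. Under these assumptions, the best fixation $\mathbf{x}^{\ast}$ depends on how the falloffs of the different features trade off against one another, so it need not sit on any single feature.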

At least for the three important tasks investigated (identity, emotion, and gender), just below the eyes is the optimal place to look, say the scientists, because it allows one to read information from as many features of the face as possible.

"What the visual system is adept at doing is taking all those pieces of information from your face and combining them in a statistical manner to make a judgment about whatever task you're doing," said Eckstein. The area around the eyes contains minute bits of important information that require the high-resolution processing available close to the fovea, whereas features like the mouth are larger and can be read without looking at them directly.

The study shows that the ability to learn optimal rapid eye movements for evolutionarily important perceptual tasks is inherent in humans; however, the scientists say, the behavior is not necessarily consistent for everybody. Eckstein's lab is currently studying a small subset of people who do not look just below the eyes to identify a person. Other researchers have shown that East Asians, for instance, tend to look lower on the face when identifying faces.

The research by Peterson and Eckstein has resulted in sophisticated new algorithms to model optimal gaze patterns when looking at faces. The algorithms could potentially provide insight into conditions associated with uncommon gaze patterns, such as schizophrenia and autism, as well as prosopagnosia (an inability to recognize people by their faces).

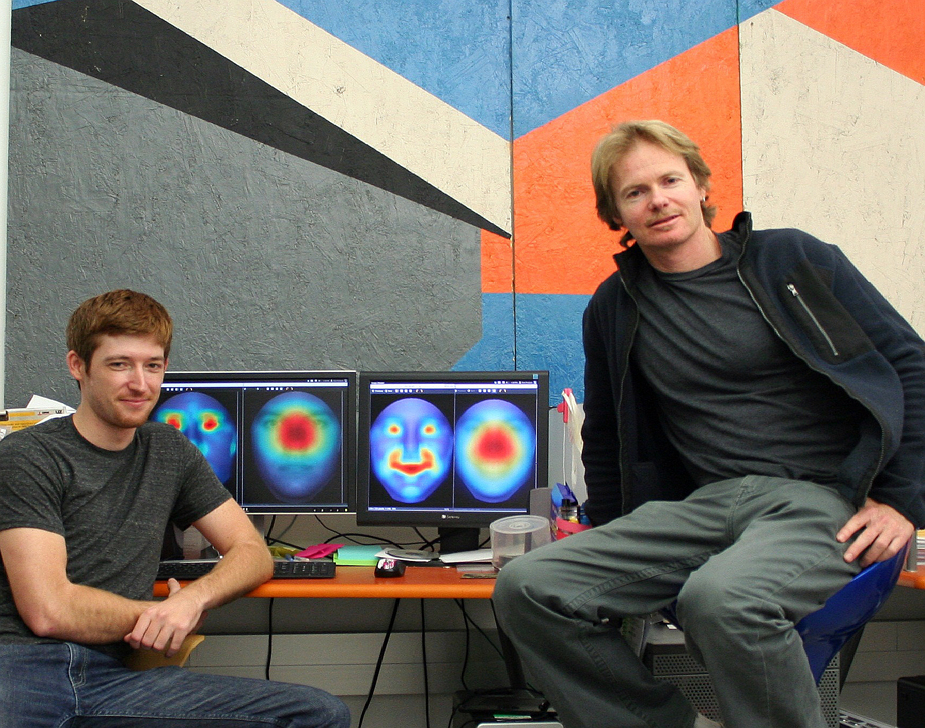

† Top image: Graduate student Matt Peterson (left) and Professor Miguel Eckstein (right) at the Vision & Image Understanding (VIU) Laboratory. The images on the computer displays are predictions of computational models tested in the PNAS paper: the 2nd and 4th images from the left correspond to the optimal gaze model, which best predicts where a majority of humans look (darker red areas). In the background: art by Santa Barbara artist Zack Paul on display at the VIU lab.

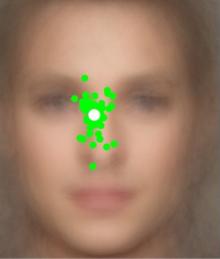

†† Middle image: Places within the face (green circles) where, on average, each of 50 participants first looked when trying to identify faces of famous people. The white circle corresponds to the average across all participants. The background is an average of 120 celebrity faces.

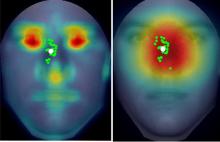

††† Bottom image: Left: predictions of a model that looks at the most informative features in the display for a person identification task (darker red areas at the center of the eyes are the predicted looking location). Right: predictions of an optimal gaze model that takes into account the varying spatial detail of human visual processing across the visual field (darker red areas are the predicted looking location). Green circles are where, on average, each of the participants first looked when identifying the face. The white circle corresponds to the average across all participants.
